Written by: krane, lamby (Asula), sylve, lancelot (Hyle)

A big thank you to our reviewers: Aaron Elijah Mars, Alex Sirac, Arthaud Mesnard, Voynich, François Garillot, Jacob Everly, Jeremy Bruestle, Nuke, Prince Sinha, James Parillo, Peteris Erins, Patrick O’Grady, Phil Kelly, Polynya, Rajiv Patel O’Connor, Raul Kripalani, Teemu

Introduction

Over the last week, we’ve seen multiple proposals for the roadmap of Ethereum’s Consensus Layer. Most notably, Justin Drake laid out his vision for the ZK era of Ethereum in his talk at Devcon 2024. Dubbed the beam chain or the beam fork, the proposal batches a number of big-ticket upgrades for Ethereum, including shorter slot times, faster finality and the ‘snarkification’ of Ethereum’s consensus. It has been met with mixed reactions regarding its ambition and the timeline for these changes, and caution is certainly warranted for Ethereum given the size of its economy. Even so, it is useful to consider what a maximally ambitious future for the base layer of a rollup-centric ecosystem could look like. In this spirit of “what can be, unburdened by what has been”, this article covers one such future that utilizes advancements in ZK and consensus research.

We’ll begin by examining base layers from first principles, after which we’ll explore core concepts in consensus research. Lastly, we’ll dive into how this research can be applied to a new generation of base layer designs, especially in a ZK regime.

Base Layers

Today, most rollups employ centralized sequencers that order and execute transactions. Once the sequencer has produced a block, it is also responsible for producing a proof of the execution for others to verify. For execution to be verifiable, third parties need the rollup’s state data along with this proof of execution. State data and proofs are normally posted to a Data Availability (DA) Layer, and state transitions are verified by a Verification Layer (more commonly referred to by the misnomer Settlement Layer).

In the early days, Ethereum laid out the rollup-centric roadmap and was the original base layer, performing both DA and verification. Ethereum’s unique state (i.e., the large set of valuable assets issued on Ethereum) made it a natural verification or settlement layer for rollups. By using Ethereum as a base, rollups would inherit not only its security but also its liquidity. Besides, there were no specialized settlement or DA options on the market at the time.

Even in today’s world of many specialized layers, Ethereum, with the largest PoS validator set and blob support, is a very secure option as a DA layer. Moreover, the number and market cap of the family of assets on Ethereum have continued to grow. Since “settlement” is asset-specific, a rollup that wants to allow forced exits must perform verification on the chain that issues the asset. If a rollup wants to allow forced exits for Ethereum-issued assets, it must use Ethereum for verification.

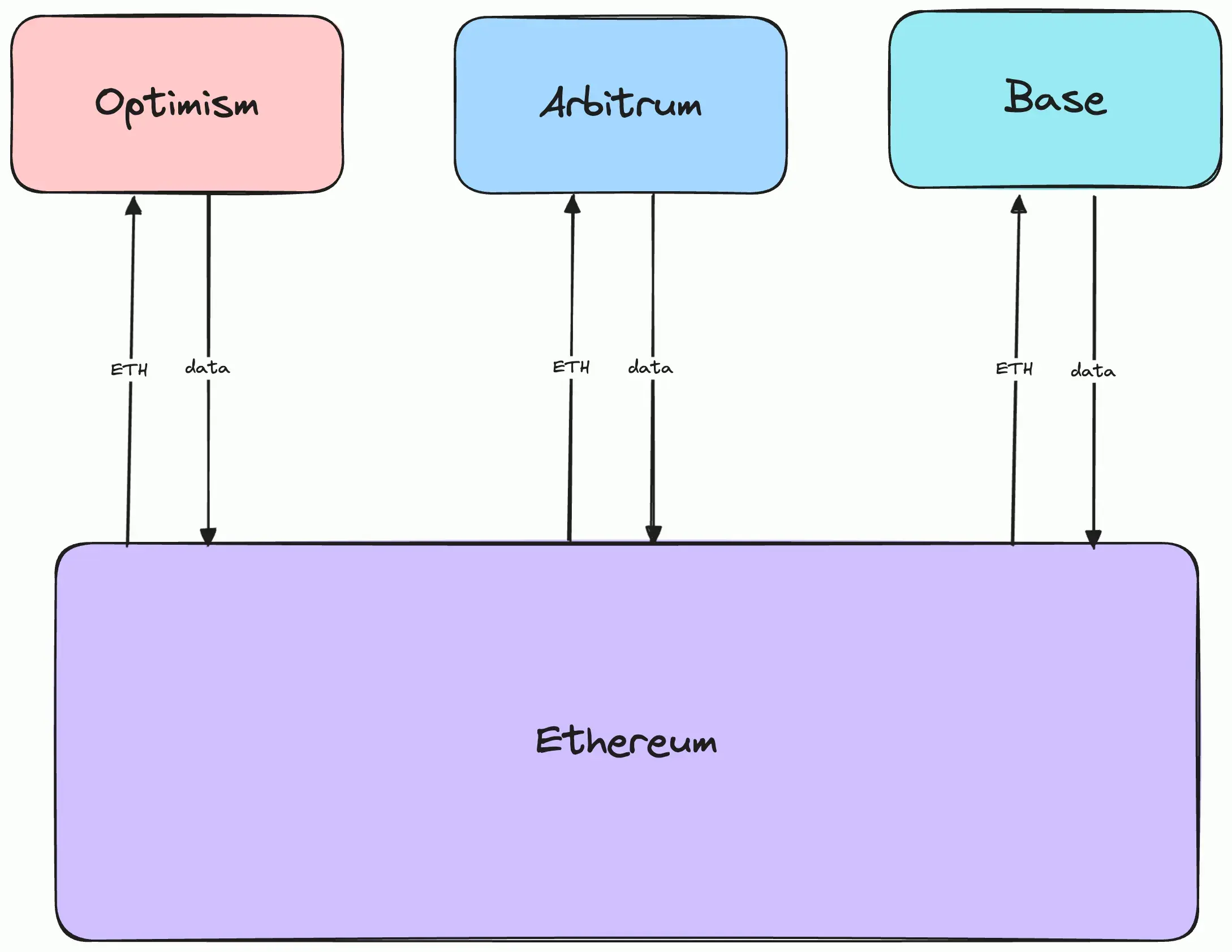

Today Ethereum looks something like:

Ethereum today.

However, it is also true that specialized DA layers and settlement layers are competing directly with Ethereum to perform these operations. For example, Celestia and EigenDA already provide significantly higher DA throughput (albeit with different security models). Similarly, Initia is extending the idea of a verification or settlement hub by providing oracles, unified wallet experiences and built-in interoperability to enable a more seamless experience for users within the ecosystem (this has also become an important point on the Ethereum roadmap over the last few months).

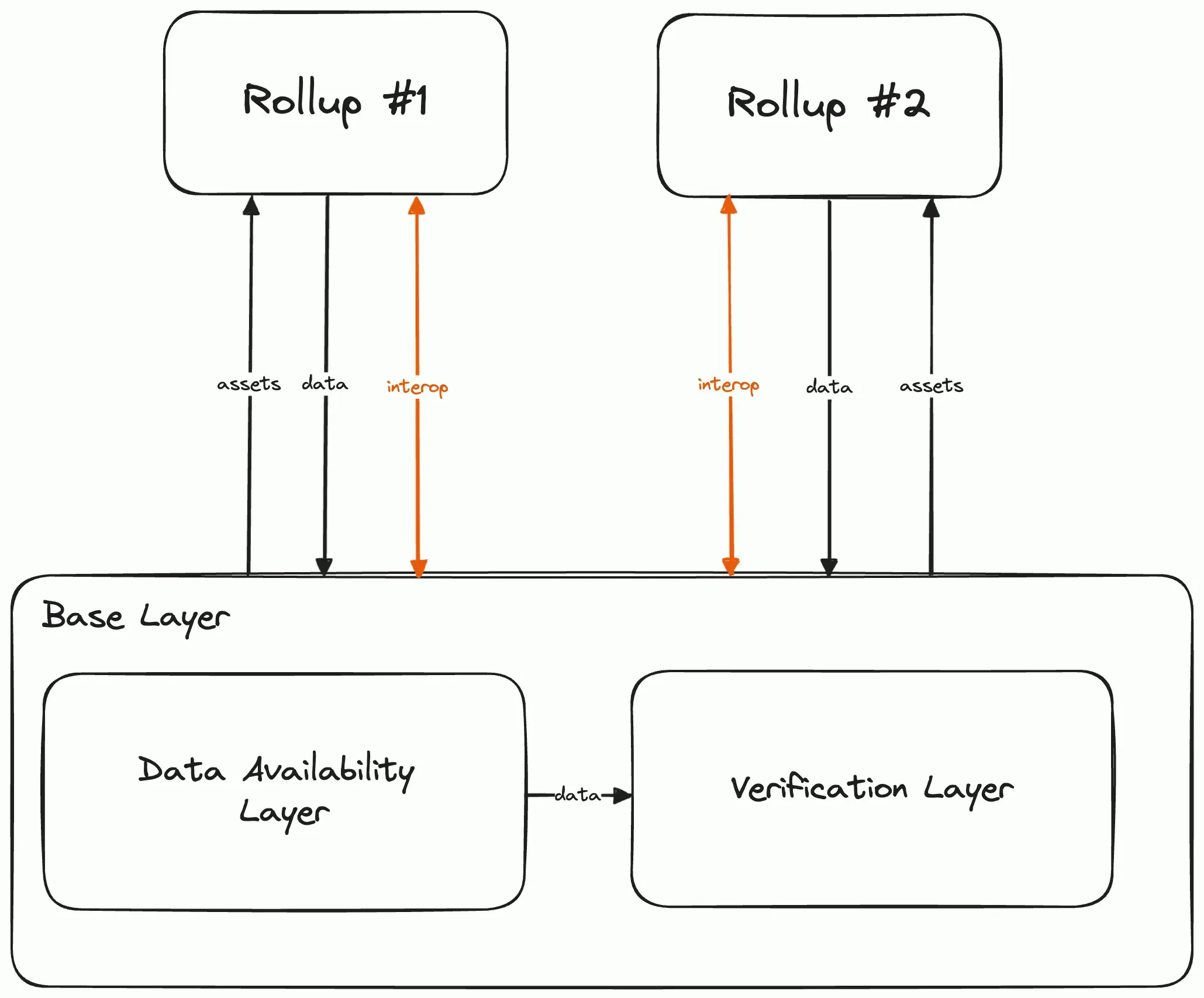

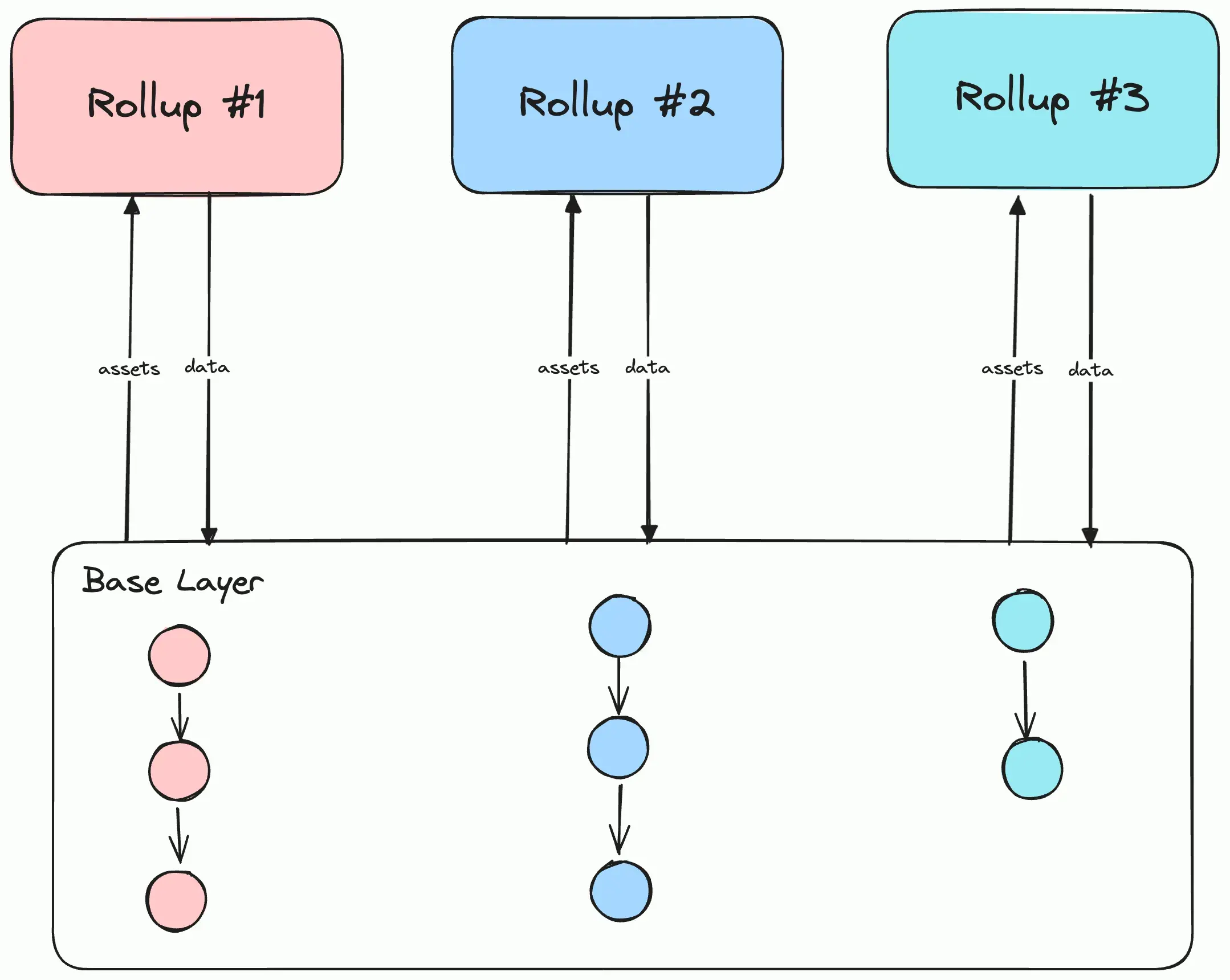

All of these systems take the same shape as Ethereum, with the base layer being decoupled into Data Availability and Verification, each serving as a specialized hub for their respective operations:

Rollups with specialized DA and verification layers

The key insight in newer designs lies in separating the optimizations that DA layers and verification layers each need to make. The original role of a blockchain was to decentralize the trusted third party between two mutually distrusting counterparties. In a rollup-centric system, the role of the base layer is to act as a decentralized trusted third party between rollups, enabling interoperability between them. Once the base layer verifies the state of a rollup, all other rollups can implicitly trust the base layer. The other core property of the rollup-centric design is that it allows applications to give users fast and cheap transaction preconfirmations in the average case (via somewhat centralized sequencers) without compromising on eventual censorship resistance in the worst case (via forced exits on the base layer).

With this separation between data availability and verification in mind, and given the core functions of a base layer (eventual censorship resistance, interoperability between rollups, and asset issuance), we can reason about how to build a better base layer. Currently, rollups post state data to the base layer every few hours, meaning preconfirmations provided by the rollup sequencer only finalize on the base layer at this timescale. A base layer with higher data throughput than Ethereum L1 can allow rollups to post data more often, decreasing the time from rollup preconfirmations to base layer confirmations and thus increasing the security of the rollup. Similarly, more frequent verification enables faster interoperability between rollups, eliminating the need for liquidity bridges and market makers. We can use specific insights about the shape of the workloads a base layer has to handle to build base layers with higher throughput and faster inter-rollup communication.
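To make the latency argument concrete, here is a back-of-the-envelope sketch. It assumes (our assumption, not a measured figure) that a preconfirmed transaction lands uniformly at random within a rollup's posting interval, so it waits on average half an interval, and at worst a full interval, before the rollup posts, plus whatever time the base layer needs to confirm that post. The cadences and the 12-second base-layer confirmation time below are purely illustrative.

```python
def preconf_to_base_latency(post_interval_s: float, base_confirm_s: float) -> tuple[float, float]:
    """Return (average, worst-case) seconds from a sequencer preconfirmation
    to base-layer confirmation.

    Assumes transactions arrive uniformly within each posting interval, so the
    average wait for the next post is half the interval and the worst case is
    a full interval, followed by the base layer's own confirmation time.
    """
    average = post_interval_s / 2 + base_confirm_s
    worst = post_interval_s + base_confirm_s
    return average, worst


# Hypothetical cadences: posting every 2 hours vs. every 2 seconds, holding the
# base layer's confirmation time fixed at a hypothetical 12 s.
slow = preconf_to_base_latency(2 * 60 * 60, 12)
fast = preconf_to_base_latency(2, 12)
print(f"slow: avg {slow[0]:.0f}s, worst {slow[1]:.0f}s")
print(f"fast: avg {fast[0]:.0f}s, worst {fast[1]:.0f}s")
```

With a fixed confirmation time, the posting cadence dominates the gap between preconfirmation and base-layer confirmation, which is why higher DA throughput (allowing more frequent posts) translates directly into a shorter window.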

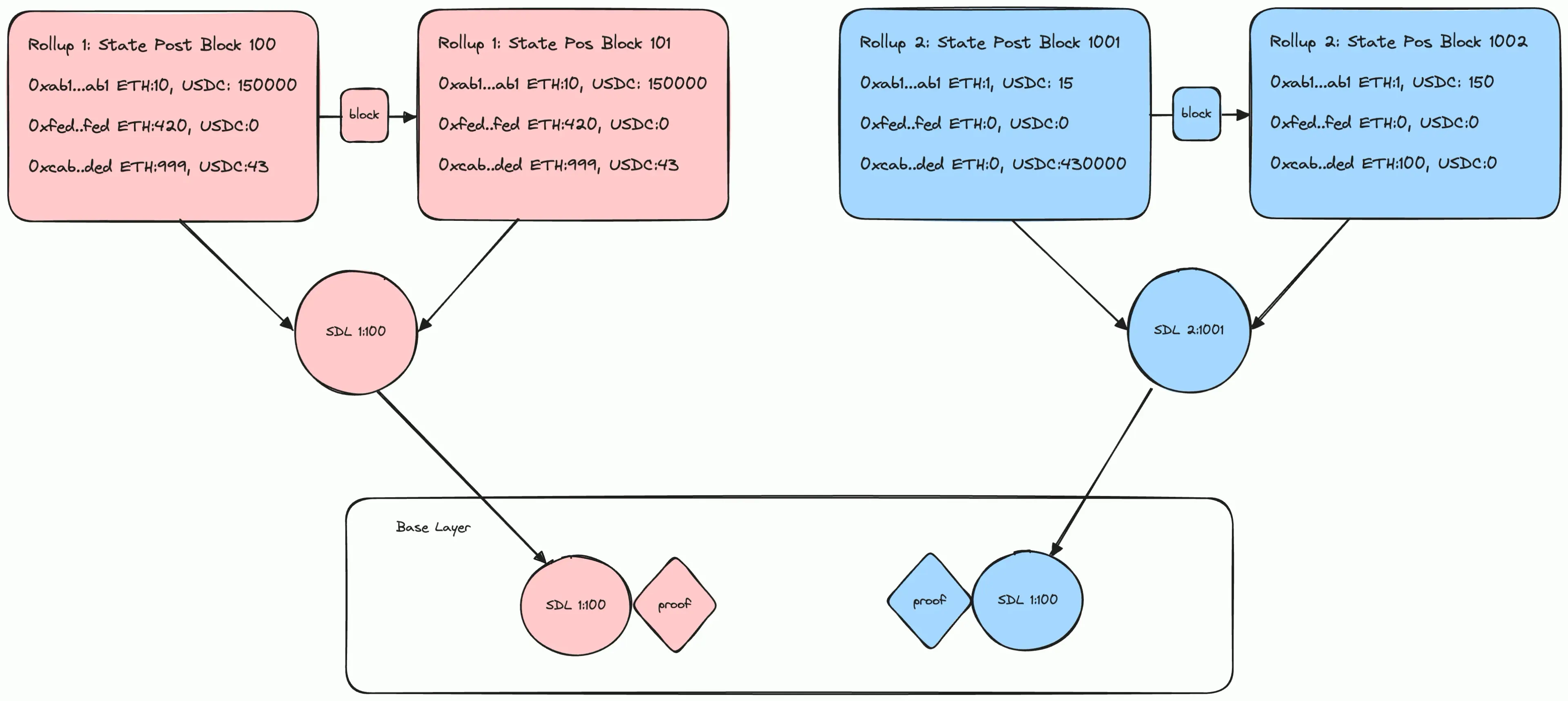

Integrated blockchains have pockets of “hot state”, like DEX pools, that are accessed frequently. This makes the relative ordering of transactions from all participants important. Rollups, on the other hand, typically operate on largely independent state spaces, with most transactions only affecting state within their own rollup. While cross-rollup interactions do occur (for example, when users transfer assets between rollups or when rollups compose with each other), these interactions are explicit, well-defined and known ahead of time. Because the vast majority of transactions within each rollup operate on disjoint state, and cross-rollup transactions are handled through specific interoperability mechanisms, there is less need for a strict total ordering of all rollup data on the base layer. Instead, ordering can be enforced selectively, only where rollups explicitly interact:

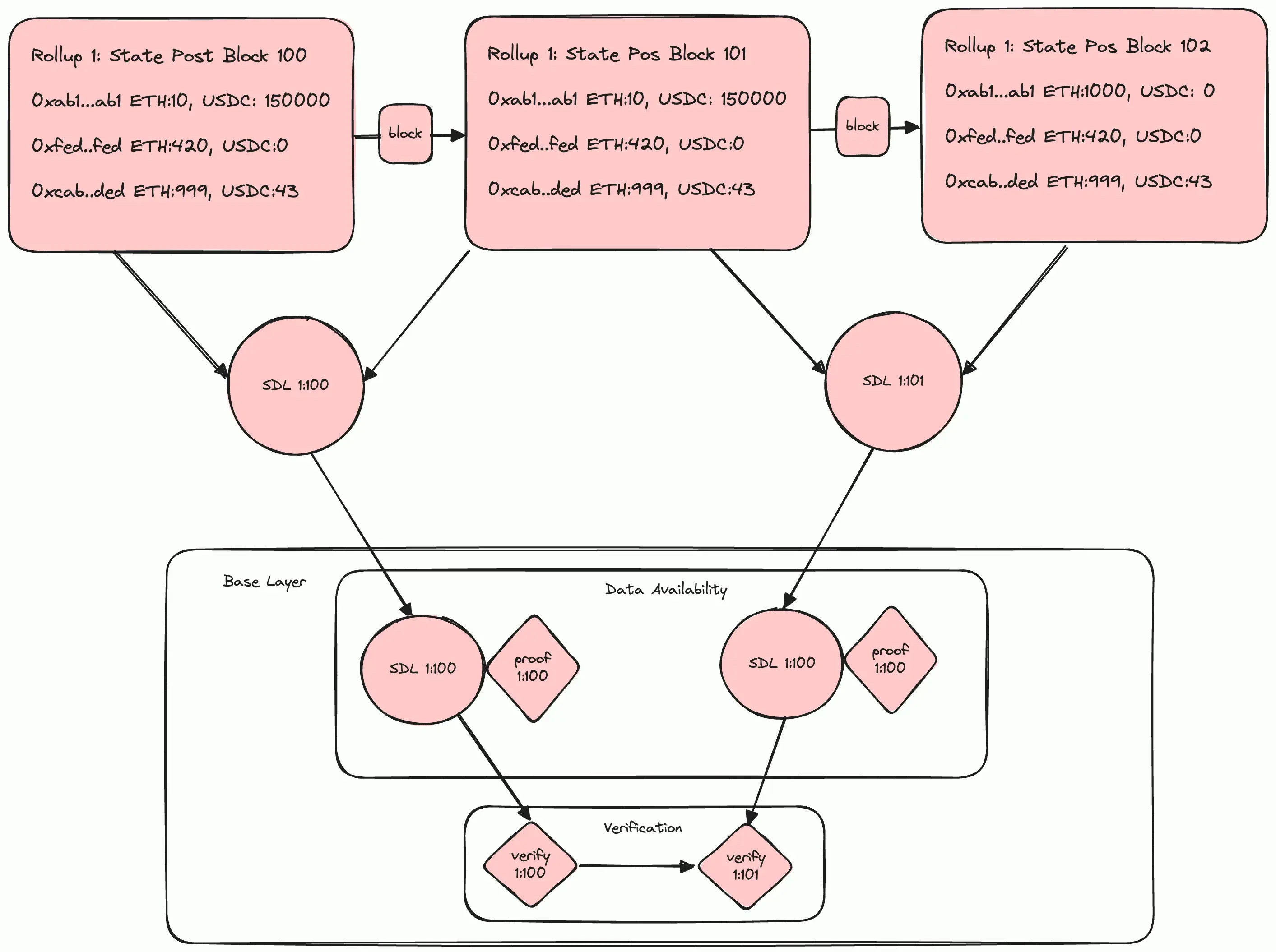

Two rollups posting state-diff lists and ZK proofs of their state transitions to the base layer.

NB: We assume here that the rollups post state-diff lists along with a ZK proof of their rollup state transitions.

The core insight here concerns the causal relationship between transactions, and it underpins much of the work on Directed Acyclic Graph (DAG)-based consensus models. In general, DAG algorithms make dependencies explicit so that computation and processing can happen in parallel. Borrowing from these ideas, we expect rollup base layers to emerge where consensus is largely relaxed in favor of higher throughput and lower latency.

The natural partitioning of rollup state suggests that forcing a total order across all rollup transactions may be unnecessary overhead. Systems such as delta and Hylé leverage this insight by allowing rollups to progress independently, requiring coordination only for cross-domain asset transfers. However, this isn’t a complete elimination of consensus; rather, it’s a refinement of where consensus is actually needed. The innovation lies in recognizing that ordering can be localized to where it’s required, rather than imposed globally across all transactions.
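One way to make “ordering only where it’s required” precise is a read/write-set conflict check: two operations need a relative order only if one writes state that the other reads or writes. The sketch below is a hypothetical model (the `Op` type and the `rollup:key` naming are ours, not taken from delta or Hylé) in which each rollup posts updates to its own namespace and only an explicit cross-rollup transfer touches two namespaces.

```python
from dataclasses import dataclass, field
from itertools import combinations


@dataclass(frozen=True)
class Op:
    """A hypothetical base-layer operation with explicit read and write sets."""
    name: str
    reads: frozenset = field(default_factory=frozenset)
    writes: frozenset = field(default_factory=frozenset)


def conflicts(a: Op, b: Op) -> bool:
    """Two ops must be ordered relative to each other iff one writes something
    the other reads or writes (a write-write or read-write overlap)."""
    return bool(a.writes & (b.reads | b.writes) or b.writes & a.reads)


def pairs_needing_order(ops: list[Op]) -> list[tuple[str, str]]:
    """Every pair of ops whose relative order actually matters."""
    return [(a.name, b.name) for a, b in combinations(ops, 2) if conflicts(a, b)]


ops = [
    Op("A: state diff", reads=frozenset({"A:root"}), writes=frozenset({"A:root"})),
    Op("B: state diff", reads=frozenset({"B:root"}), writes=frozenset({"B:root"})),
    Op("C: state diff", reads=frozenset({"C:root"}), writes=frozenset({"C:root"})),
    # An explicit cross-rollup transfer is the only op touching two namespaces.
    Op("A->B transfer", reads=frozenset({"A:root", "B:root"}),
       writes=frozenset({"A:root", "B:root"})),
]
print(pairs_needing_order(ops))
```

Rollup C never needs to be ordered against anything, and A and B only need ordering relative to the transfer that joins them; everything else can progress independently.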

The biggest impact of this partitioning is that it gives rollups an elegant way to increase throughput on specialized execution environments without sacrificing composability with other rollups.

Causal Ordering vs Total Ordering

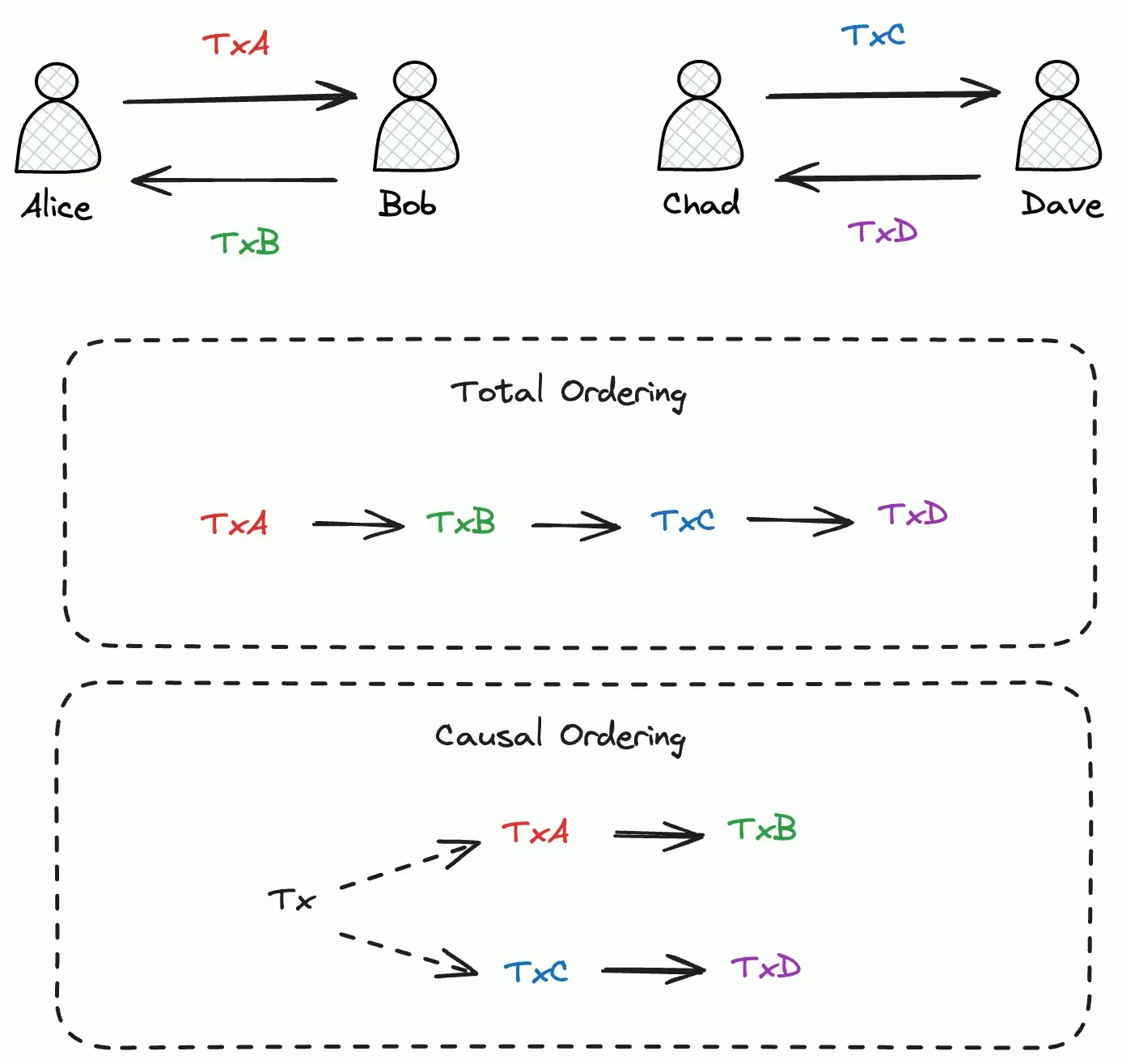

Before we go further, let’s take a step back and talk a bit more about ordering. Broadly speaking, consensus is the agreement of all nodes in the network on the ordering of valid transactions:

- Linear blockchains must agree on the total ordering of transactions, that is, the complete linear order of events as they have occurred in the view of all participating nodes. Transactions that have nothing to do with each other are still placed neatly into a global ordering.

- Causal ordering, on the other hand, is simply an ordering of transactions in which the transactions that happened previously are ordered before those that rely on their outputs. Transactions that are not causally related do not need to be ordered relative to each other. This is also known as partial ordering. DAGs are simply data structures that implement partial ordering across a set of transactions. Partial ordering also opens the door to parallel transaction execution across disjoint parts of a DAG. Here, there is no single, global ordering of transactions that all nodes agree on.
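A small illustration of partial ordering using Python’s standard-library `graphlib.TopologicalSorter` (Python 3.9+). The transaction names and dependencies are hypothetical: each entry maps a transaction to the transactions whose outputs it consumes. Collecting the ready set at each step yields “waves” of causally independent transactions that could be executed in parallel.

```python
from graphlib import TopologicalSorter

# Hypothetical transactions -> the transactions whose outputs they depend on.
deps = {
    "t1": set(),          # independent
    "t2": set(),          # independent
    "t3": {"t1"},         # spends an output of t1
    "t4": {"t2"},         # spends an output of t2
    "t5": {"t3", "t4"},   # depends on both branches
}


def parallel_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Return batches of transactions; everything in a batch has all of its
    causal dependencies in earlier batches, so a batch can run in parallel."""
    ts = TopologicalSorter(graph)
    ts.prepare()  # raises graphlib.CycleError if the graph is not a DAG
    waves = []
    while ts.is_active():
        ready = sorted(ts.get_ready())  # sorted only for readable output
        waves.append(ready)
        ts.done(*ready)
    return waves


print(parallel_waves(deps))
```

Any sequence that respects these waves is a valid causal order; `t1` and `t2`, for example, never need to be ordered relative to each other.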

Total ordering can be built on top of a DAG, but doing so requires an additional consensus mechanism to agree on the order of concurrent events. Examples include the Narwhal and Tusk protocol and a more recent evolution, Sui’s Mysticeti.

Causal ordering of transactions.
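Turning a DAG into a total order means every node must pick the same linear extension of the partial order. The sketch below does this with Kahn’s algorithm and a deterministic tie-break (smallest transaction ID first), so any two nodes holding the same DAG derive the same sequence. This only illustrates the idea: protocols such as Narwhal and Tusk or Mysticeti rely on a consensus step (for example, committing leader vertices) rather than on a bare ID-based tie-break, and the DAG below is hypothetical.

```python
import heapq


def linearize(deps: dict[str, set[str]]) -> list[str]:
    """Deterministically linearize a DAG given as node -> set of dependencies.

    Kahn's algorithm: repeatedly emit a node whose dependencies have all been
    emitted; among several such nodes, emit the smallest ID first so that all
    nodes holding the same DAG produce the same total order.
    Assumes every dependency also appears as a key in `deps`.
    """
    remaining = {node: len(parents) for node, parents in deps.items()}
    children: dict[str, list[str]] = {node: [] for node in deps}
    for node, parents in deps.items():
        for parent in parents:
            children[parent].append(node)

    ready = [node for node, count in remaining.items() if count == 0]
    heapq.heapify(ready)
    order = []
    while ready:
        node = heapq.heappop(ready)  # deterministic tie-break: smallest ID
        order.append(node)
        for child in children[node]:
            remaining[child] -= 1
            if remaining[child] == 0:
                heapq.heappush(ready, child)

    if len(order) != len(deps):
        raise ValueError("dependency graph contains a cycle")
    return order


# Hypothetical DAG, deliberately listed out of order:
# t3 depends on t1, t4 on t2, and t5 on both t3 and t4.
dag = {"t4": {"t2"}, "t1": set(), "t5": {"t3", "t4"}, "t2": set(), "t3": {"t1"}}
print(linearize(dag))
```

The order in which the DAG happens to be stored does not affect the result, which is the property that lets independent nodes agree on one sequence.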

Transactions within a DAG can be confirmed independently of other, unrelated transactions. Once a transaction has obtained a quorum from a majority of validators, it is considered valid. Allowing transactions to be confirmed individually instead of within a block can drive a large increase in transaction throughput, as many transactions can be proposed and confirmed in parallel. This can be thought of as a generalization of single-leader consensus in which any validator can propose a new transaction (NB: this can also be viewed as proposing a block containing a single transaction).
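Why a quorum protects against conflicting confirmations comes down to quorum intersection: any two quorums must overlap, so two conflicting transactions cannot both gather one without some validator voting for both. The arithmetic below is a protocol-agnostic sketch. With a simple majority, two quorums share at least one validator, which suffices if validators can only crash. Byzantine-fault-tolerant protocols typically assume n = 3f + 1 and use quorums of 2f + 1, so any two quorums share at least f + 1 validators and therefore at least one honest one.

```python
def min_overlap(n: int, quorum: int) -> int:
    """Smallest possible intersection of two quorums of size `quorum` out of n."""
    return max(0, 2 * quorum - n)


def majority_quorum(n: int) -> int:
    return n // 2 + 1


def bft_quorum(n: int) -> int:
    """Quorum tolerating f Byzantine validators, where f = (n - 1) // 3."""
    f = (n - 1) // 3
    return n - f  # equals 2f + 1 when n = 3f + 1


n = 100
f = (n - 1) // 3
for label, q in [("majority", majority_quorum(n)), ("BFT", bft_quorum(n))]:
    overlap = min_overlap(n, q)
    # Safety against Byzantine voters needs the overlap to contain an honest node.
    print(f"{label}: quorum={q}, min overlap={overlap}, survives {f} Byzantine: {overlap > f}")
```

For 100 validators, majority quorums of 51 can overlap in as few as 2 validators, while quorums of 67 always share at least 34, more than the 33 that may be faulty.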

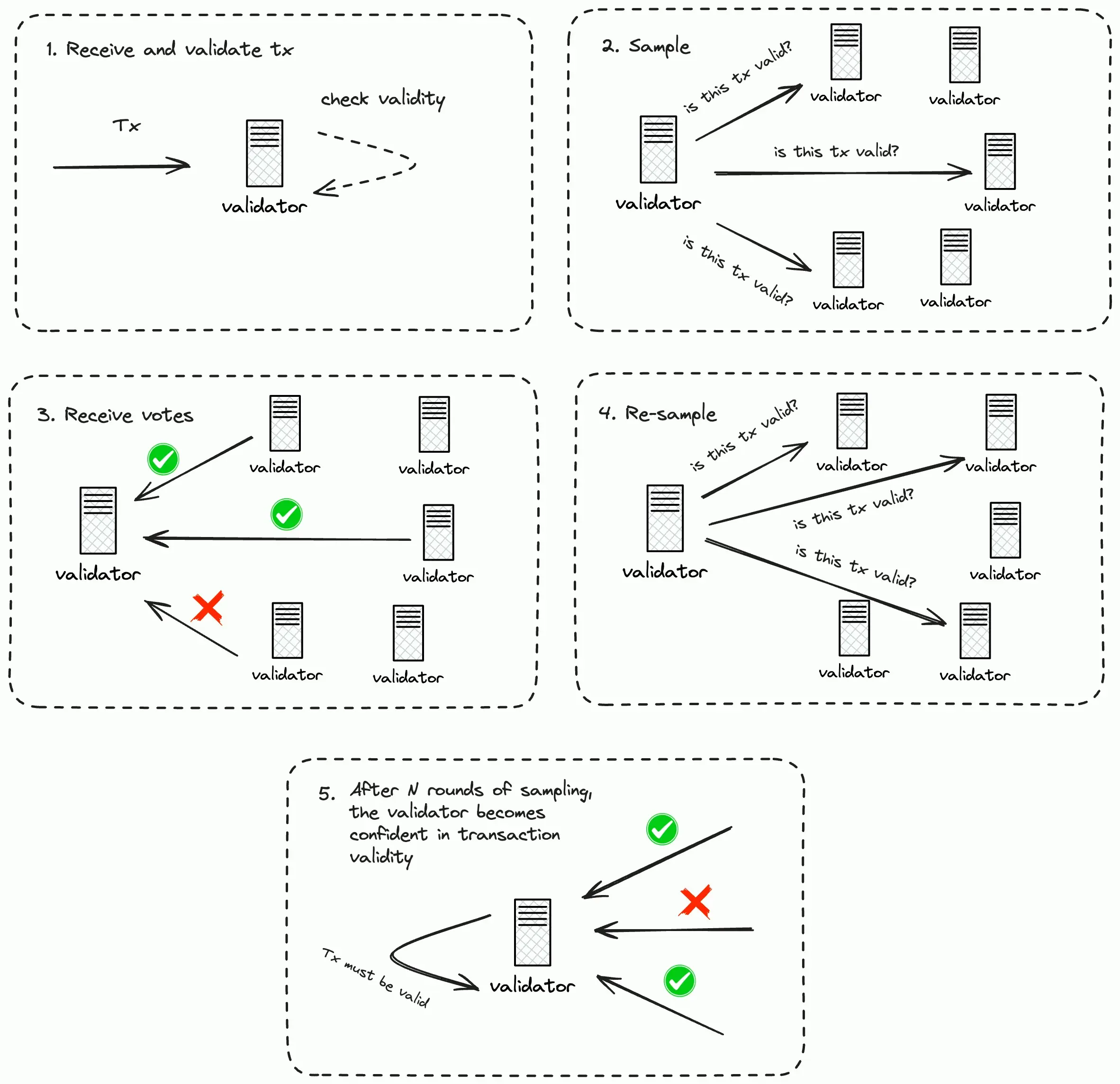

To summarize how transaction validation works in a DAG:

- A user broadcasts transactions to a subset of validator nodes.

- When a node receives a transaction, it first checks if it conflicts with any transactions it currently knows about, based on its local view of the graph.

- If there is a conflict, such as attempting to spend the same funds, the transaction is rejected.

- If there is no conflict, the receiving node interacts with other nodes in the network to reach some form of agreement on the validity of the transaction. One such method is subsampling, wherein the node runs several rounds of querying, each time sampling a subset of other nodes and asking whether they consider the transaction valid based on their own local view. If a threshold of sampled nodes responds positively, the round of querying is considered successful and a quorum is said to have been reached. This sampling process is repeated until the node is confident in the transaction’s validity. It allows nodes to quickly reach probabilistic consensus on transaction validity without requiring global agreement. The repeated sampling helps ensure that consensus emerges across the network, making it extremely unlikely for conflicting transactions to both be accepted.
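The steps above can be sketched as a toy, single-process simulation loosely in the style of the Snowflake/Snowball protocols behind Avalanche consensus. Everything here is our simplification: `k` (sample size), `alpha` (agreeing responses needed per round) and `beta` (consecutive successful rounds before deciding) are illustrative parameters, `peer_votes` is a fixed list standing in for peers’ local views, the local conflict check is modeled as a set of already-spent inputs, and there is no networking or adversary.

```python
import random
from typing import Optional


def has_conflict(tx_inputs: set[str], spent: set[str]) -> bool:
    """Local conflict check: does the transaction reuse an already-spent input?"""
    return not tx_inputs.isdisjoint(spent)


def subsample_decide(peer_votes: list[bool], k: int, alpha: int, beta: int,
                     rng: random.Random, max_rounds: int = 1_000) -> Optional[bool]:
    """Query k random peers per round. If at least `alpha` of them agree on an
    answer, the round counts toward that answer; `beta` consecutive rounds for
    the same answer decide it. Returns None if undecided after `max_rounds`."""
    last, streak = None, 0
    for _ in range(max_rounds):
        yes = sum(rng.sample(peer_votes, k))
        if yes >= alpha:
            answer = True
        elif k - yes >= alpha:
            answer = False
        else:
            last, streak = None, 0  # inconclusive round resets confidence
            continue
        streak = streak + 1 if answer == last else 1
        last = answer
        if streak >= beta:
            return answer
    return None


def validate(tx_inputs: set[str], spent: set[str], peer_votes: list[bool],
             seed: int = 7) -> bool:
    if has_conflict(tx_inputs, spent):
        return False  # e.g. a double spend is rejected locally, without sampling
    decision = subsample_decide(peer_votes, k=20, alpha=15, beta=10,
                                rng=random.Random(seed))
    return decision is True


# Hypothetical network of 100 peers, 90 of which view the transaction as valid.
peers = [True] * 90 + [False] * 10
print(validate({"utxo-1"}, spent={"utxo-0"}, peer_votes=peers))
print(validate({"utxo-0"}, spent={"utxo-0"}, peer_votes=peers))
```

Requiring several consecutive successful rounds, rather than a single one, is what drives the probability of a wrong decision down without any node ever contacting the whole network.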

Subsampling for transaction validation.

It is important to reiterate that any node can run this interactive process to achieve quorum at any time, allowing consensus to progress along multiple paths. In some sense, each validator or replica is running its own blockchain that periodically synchronizes with the rest of the nodes. This idea of progressing multiple blockchains before reconciling them has also been explored in non-DAG designs such as Autobahn (which still relies on separating data dissemination from ordering). In Autobahn, each validator maintains its own lane of transactions, and these lanes are then reconciled during a sync process. While lanes aren’t explicitly referred to as blockchains in the paper, we believe they are very close approximations of blockchains, and the sync process is akin to merging multiple blockchains.
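To make the “many blockchains, periodically merged” intuition concrete, here is a toy sketch in which each validator appends to its own lane without coordination, and a sync step takes an agreed cut (how far into each lane to include) and merges the new entries deterministically. This is our simplification: as we read the Autobahn paper, its consensus layer agrees on cuts of lane tips through its own protocol, whereas here the cut is simply passed in, and the round-robin interleaving is an arbitrary rule of ours rather than the paper’s ordering.

```python
from itertools import zip_longest


class Lanes:
    """Toy model: each validator appends to its own lane (its 'own blockchain');
    a sync step merges everything up to an agreed cut into one shared log."""

    def __init__(self, validators: list[str]):
        self.lanes: dict[str, list[str]] = {v: [] for v in validators}
        self.synced = {v: 0 for v in validators}  # how much of each lane is merged
        self.log: list[str] = []

    def append(self, validator: str, tx: str) -> None:
        self.lanes[validator].append(tx)  # no coordination with other lanes

    def sync(self, cut: dict[str, int]) -> list[str]:
        """Merge each lane's entries in [synced, cut) into the shared log.
        Assumes all nodes already agreed on `cut` (the consensus step)."""
        new_per_lane = []
        for v in sorted(self.lanes):  # deterministic lane order
            start = self.synced[v]
            end = cut.get(v, start)
            if not start <= end <= len(self.lanes[v]):
                raise ValueError(f"invalid cut for lane {v}")
            new_per_lane.append(self.lanes[v][start:end])
            self.synced[v] = end
        # Round-robin interleave: an arbitrary but deterministic merge rule.
        merged = [tx for group in zip_longest(*new_per_lane) for tx in group if tx is not None]
        self.log.extend(merged)
        return merged


lanes = Lanes(["v1", "v2", "v3"])
for validator, tx in [("v1", "a1"), ("v1", "a2"), ("v2", "b1"),
                      ("v3", "c1"), ("v3", "c2"), ("v3", "c3")]:
    lanes.append(validator, tx)

first = lanes.sync({"v1": 2, "v2": 1, "v3": 2})  # c3 is left for the next sync
second = lanes.sync({"v3": 3})
print(first, second)
```

Each lane grows at its own pace; only the sync step requires agreement, and it only needs to agree on a handful of lane positions rather than on every transaction.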

Causality in Base Layers

Now that we understand the concept of causality, we can piece together how it becomes relevant for base layers. As previously mentioned, rollups normally post state data or state-diff lists that correspond to updates to their own persistent, partitioned state. Data posted by two rollups would have no contention over any “hot state”, because the data sets are completely disjoint. This relaxes the need for global ordering in the base layer. Moreover, for new rollup state to be verified, only the rollup’s previously posted state must be verified. As such, the base layer is free to order these rollup transactions so that they progress independently of one another and do not need to wait to be globally ordered:

A base layer with causal ordering.
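A minimal sketch of what “only the previously posted rollup state must be verified” means for acceptance: each rollup’s posts form their own chain, and a post is accepted only if it builds on that rollup’s latest accepted state root. The names `Post`, `prev_root`, `new_root` and `CausalBaseLayer` are hypothetical, proof verification is assumed to have happened already, and forks (two posts with the same parent) are not handled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    rollup: str
    prev_root: str  # state root this post claims to build on
    new_root: str   # state root after applying the posted state diff


class CausalBaseLayer:
    def __init__(self, genesis: dict[str, str]):
        self.head = dict(genesis)                         # rollup -> latest accepted root
        self.pending: dict[tuple[str, str], Post] = {}    # (rollup, prev_root) -> waiting post
        self.accepted: list[Post] = []

    def submit(self, post: Post) -> None:
        # A second post with the same parent overwrites the first (forks out of scope).
        self.pending[(post.rollup, post.prev_root)] = post
        # Only this rollup's chain can advance; other rollups are unaffected.
        while (post.rollup, self.head[post.rollup]) in self.pending:
            nxt = self.pending.pop((post.rollup, self.head[post.rollup]))
            self.head[post.rollup] = nxt.new_root
            self.accepted.append(nxt)


base = CausalBaseLayer({"A": "a0", "B": "b0"})
base.submit(Post("A", "a1", "a2"))  # arrives early: its parent (a0 -> a1) is unseen
base.submit(Post("B", "b0", "b1"))  # B progresses immediately, without waiting on A
base.submit(Post("A", "a0", "a1"))  # unblocks A's queued post
print([(p.rollup, p.new_root) for p in base.accepted])
```

Rollup B never waits on rollup A; the only dependency each post has is on its own rollup’s previous post.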

Zooming in, rollups should be able to post data and proofs to the base layer freely, without worrying about fees. As the data propagates through the network, validators on the base layer would verify the proofs posted by rollup sequencers. If a quorum of validators verifies a proof, the transaction is considered confirmed. Such a system would allow rollups to achieve confirmation at the speed at which data is disseminated through the base layer. In theory, this should also reduce the time between sequencer preconfirmations and confirmations from the base layer.

A single rollup posting state-diff lists and ZK proofs of its state transitions to the base layer.
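The confirmation rule described above can be sketched as per-post attestation counting: each validator checks a rollup’s proof independently and attests if it verifies, and the post is confirmed as soon as attestations reach a quorum, with no block or global sequence involved. The `verify_proof` function is a hypothetical stand-in (a SHA-256 commitment check), not a real ZK verifier, and the quorum of 3 out of 4 validators is an assumed parameter (2f + 1 with f = 1).

```python
import hashlib
from collections import defaultdict


def verify_proof(state_diff: bytes, proof: bytes) -> bool:
    """Hypothetical stand-in for a ZK verifier: here the 'proof' is just a
    SHA-256 commitment to the state diff. A real system would run a succinct
    proof verifier instead."""
    return hashlib.sha256(state_diff).digest() == proof


class Confirmations:
    def __init__(self, validators: set[str], quorum: int):
        self.validators = validators
        self.quorum = quorum
        self.votes: dict[str, set[str]] = defaultdict(set)  # post id -> attesters
        self.confirmed: set[str] = set()

    def attest(self, validator: str, post_id: str, state_diff: bytes, proof: bytes) -> bool:
        """A validator checks the proof locally and attests if it verifies.
        Returns True once the post has reached quorum."""
        if validator in self.validators and verify_proof(state_diff, proof):
            self.votes[post_id].add(validator)  # a set ignores duplicate votes
        if len(self.votes[post_id]) >= self.quorum:
            self.confirmed.add(post_id)
        return post_id in self.confirmed


diff = b"rollup-A: balance[alice] = 5"
good, bad = hashlib.sha256(diff).digest(), b"\x00" * 32
c = Confirmations({"v1", "v2", "v3", "v4"}, quorum=3)
results = [c.attest(v, "A#1", diff, good) for v in ["v1", "v2", "v3"]]
rejected = c.attest("v4", "A#2", diff, bad)  # proof does not verify, no attestation
print(results, rejected)
```

Each post is tracked on its own, so posts from different rollups can reach quorum concurrently and in any order.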

The above system hinges on the premise that ZK-based execution sharding, rather than replicated execution, is the future of verifiable applications.

Cross-shard transactions that move data between two rollups would need ordering, but this ordering would also be partial. For example, a transfer of asset X from rollup A to rollup B would require the withdrawal transaction from rollup A to reach a quorum before rollup B can include the deposit transaction. Fast confirmations from the base layer would provide credible guarantees for interoperability between rollups in the same ecosystem, creating network effects for the base layer. Fast interoperability combined with a large set of valuable assets might be enough to make a base layer attractive to prospective rollups. All in all, such a specialized design would allow:

- Fast confirmation times for rollup transactions.

- Fast interoperability between rollups (without the need for liquidity bridges or market makers).

- Specialized DA throughput for rollups.

- Specialized verification tooling (more proving systems) for rollups.
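The withdrawal-before-deposit rule for cross-rollup transfers is the only ordering constraint between the two rollups involved. A minimal sketch, assuming hypothetical `withdrawal_id`s and a record of withdrawals the base layer has already confirmed by quorum: a deposit on the destination rollup is includable only if it references a confirmed withdrawal bound for that rollup that has not already been claimed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Withdrawal:
    withdrawal_id: str
    source: str   # rollup the asset leaves
    dest: str     # rollup the asset is bound for
    asset: str
    amount: int


class Bridge:
    """Tracks the single causal edge of a cross-rollup transfer:
    withdrawal (confirmed on the base layer) -> deposit (on the destination)."""

    def __init__(self):
        self.confirmed: dict[str, Withdrawal] = {}
        self.claimed: set[str] = set()

    def confirm_withdrawal(self, w: Withdrawal) -> None:
        # Called once the withdrawal has reached quorum on the base layer.
        self.confirmed[w.withdrawal_id] = w

    def can_deposit(self, dest: str, withdrawal_id: str) -> bool:
        w = self.confirmed.get(withdrawal_id)
        return w is not None and w.dest == dest and withdrawal_id not in self.claimed

    def deposit(self, dest: str, withdrawal_id: str) -> Withdrawal:
        if not self.can_deposit(dest, withdrawal_id):
            raise ValueError("withdrawal not confirmed, wrong destination, or already claimed")
        self.claimed.add(withdrawal_id)  # prevents claiming the same withdrawal twice
        return self.confirmed[withdrawal_id]


bridge = Bridge()
w = Withdrawal("w-1", source="A", dest="B", asset="X", amount=10)
print(bridge.can_deposit("B", "w-1"))     # False: withdrawal not yet confirmed
bridge.confirm_withdrawal(w)
print(bridge.can_deposit("A", "w-1"))     # False: wrong destination rollup
print(bridge.deposit("B", "w-1").amount)  # 10
print(bridge.can_deposit("B", "w-1"))     # False: already claimed
```

Nothing else on rollup A or rollup B has to wait: only the deposit depends on the withdrawal’s confirmation.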

Brief aside: value accrual to the base asset

The above discussion sketches a cheap, fast and secure base layer for rollups. However, much of the current discourse surrounding the rollup-centric roadmap revolves around value accrual to ETH and Ethereum in the presence of rollups. L2s such as Base, which own user relationships, are able to charge a premium for their blockspace and only have to pay a fraction of their revenue back to Ethereum in the form of DA fees.

By allowing rollups to achieve fast interoperability by posting state data more often, base layers can capture some of the revenue otherwise lost to market makers and liquidity bridges. That said, the amount of value a better interoperability system accrues to base layers depends entirely on the number of rollups that need to communicate with each other. In a setup where each rollup serves a single application rather than many, the value accrual for the base layer becomes much clearer: applications simply interact with each other, using the base layer for composability. Applications get the benefit of high throughput and control over their own space without sacrificing composability.

There are also some arguments for improving execution on the base layer as a way to improve value accrual to the native token. This effectively allows the base layer to compete with rollups, going against the rollup-centric design principle. Another way (and possibly our preferred way) to include execution would be to build enshrined rollups, wherein the base layer asset secures the rollup sequencer through restaking. The base layer validator set could even act as the sequencer set for the rollup if need be (although the two sets need not be the same). In fact, the topic of enshrined or native rollups has picked up steam since Martin Köppelmann’s talk at Devcon 2024. For an ecosystem such as Ethereum, this would allow ETH to earn back some lost value while also allowing developers to experiment more freely on the rollup, where the stakes are likely to be much lower than on Ethereum L1.

Conclusion

Overall, we think the ZK era represents an exciting, forward-looking future for Ethereum and for blockchains in general. In this post we outlined how combining ZK with state-of-the-art consensus opens a potential new direction for base layers in rollup-centric systems. By pairing zero-knowledge proofs with ideas borrowed from DAG-based consensus mechanisms, we can reimagine base layers that are truly optimized for rollups: consensus is applied sparingly, only where state is actually shared, rather than as a blanket requirement across all operations. As the ecosystem continues to evolve toward modular designs, we expect this more nuanced approach to base layer consensus to become standard across modular blockchains.

More broadly, we believe that since several new pieces of enabling technology are just hitting production, base layers must incorporate them to remain competitive.

All in all, we mustn’t be afraid to dream a little bigger.